About a year and a half after ChatGPT launched on GPT-3.5, OpenAI announced its latest model: ChatGPT 4o (GPT-4o, where the "o" stands for "omni"), boasting twice the speed of GPT-4 Turbo at half the API cost.

Introducing ChatGPT 4o

Twice the Speed

One of the key improvements in ChatGPT 4o is its processing speed: OpenAI reports that it responds twice as fast as GPT-4 Turbo. To showcase the new speed, OpenAI released a demo of ChatGPT 4o translating a live conversation between two people. Earlier models could also translate, but their long processing times made the experience clunky and impractical for real conversations. Check out how quickly and seamlessly ChatGPT 4o translates a live conversation:

Multimodal Integrations

ChatGPT 4o has integrated multimodal input streams into a single cohesive context. Multimodal refers to the ability to handle and integrate multiple types of data or input modalities. This means that ChatGPT 4o can process various forms of input such as:

- Text: Written content like articles, chat messages, or documents.

- Images: Photographs, diagrams, or any visual data.

- Audio: Spoken language, sound recordings, or any auditory information.

- Video: Moving visuals that may include both images and sound.

Imagine working with a document that includes a complex diagram and narrated voice instructions. Previously, ChatGPT handled these input types separately; its earlier Voice Mode, for example, chained a transcription model, a text model, and a text-to-speech model together.

With ChatGPT 4o, all of these inputs (text, diagrams, and voice) are processed together by a single model. This allows for a more natural interaction with the AI and helps it understand and respond to complex queries more accurately.
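For readers building on the API rather than the ChatGPT app, here is a minimal sketch of what "processed together" can look like in practice: one user message that carries both a text prompt and an image. It assumes the OpenAI Chat Completions message format, in which an image is sent as a base64 data URL inside an `image_url` content part. The helper name `build_multimodal_message` is hypothetical, and the sketch only builds the payload; it makes no network call.

```python
import base64


def build_multimodal_message(prompt: str, image_bytes: bytes,
                             mime_type: str = "image/png") -> list[dict]:
    """Build a single chat message that pairs a text prompt with an image.

    Assumes the OpenAI Chat Completions content-part format, in which an
    image is sent as a base64-encoded data URL inside an "image_url" part.
    """
    # Encode the raw image bytes so they can travel inside a JSON payload.
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return [
        {
            "role": "user",
            # Text and image share one message, so the model sees them in one context.
            "content": [
                {"type": "text", "text": prompt},
                {
                    "type": "image_url",
                    "image_url": {"url": f"data:{mime_type};base64,{encoded}"},
                },
            ],
        }
    ]


if __name__ == "__main__":
    # Hypothetical input: in practice you would read a real screenshot from disk.
    messages = build_multimodal_message("What does this error dialog mean?", b"\x89PNG...")
    print(messages[0]["content"][0]["text"])
```

The resulting list would then be passed as `messages` to `client.chat.completions.create(model="gpt-4o", messages=messages)` in the official `openai` Python package; check OpenAI's current API reference before relying on the exact field names.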

With ChatGPT 4o’s improved visual capabilities, you no longer need to craft the perfect query; simply show ChatGPT 4o the problem and it can help, for example by working through a math equation you’ve written by hand and offering hints along the way. Take a look:

Multimodal Output Capabilities

ChatGPT 4o can generate text, diagrams, and audio, all within the same contextual framework. This means you can receive complete responses that include detailed textual explanations, illustrative diagrams, and even narrated audio, all tailored to your specific request.

For instance, if you ask ChatGPT 4o to explain a scientific concept, you could receive a textual description, a diagram illustrating the concept, and an audio narration that guides you through the explanation. Here is an example of 4o’s visual output capabilities from OpenAI’s demo:

Microsoft Copilot Integration

Microsoft Copilot will soon incorporate the capabilities of ChatGPT 4o. This integration aims to bring the enhanced speed and multimodal capabilities of ChatGPT 4o to a wider audience.

By combining multimodal inputs and outputs in one model, ChatGPT 4o aims to offer a more natural and efficient user experience. The upcoming Microsoft Copilot integration further underscores OpenAI’s goal of building ChatGPT seamlessly into the tools we already use every day.

What This Means for IT Support

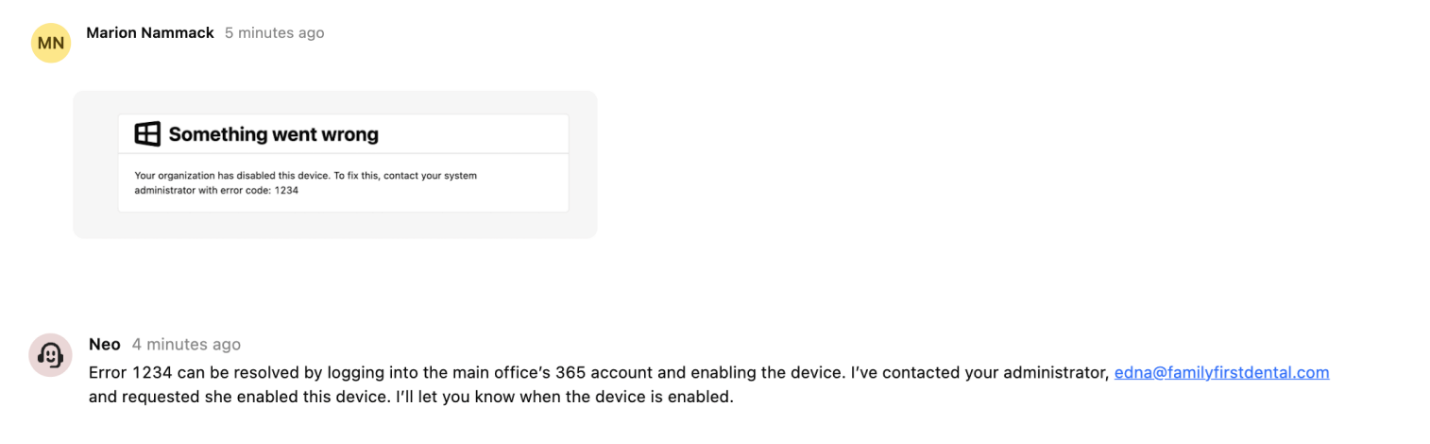

Large language models (LLMs) are already changing the game for IT teams. Marion Nammack, co-founder of Neo Agent, an AI-powered workflow automation platform for MSPs, has seen Neo’s AI features save MSPs more than 13 hours per week. We got her take on how these updates will empower IT teams:

- Visual Troubleshooting: Diagnose issues from screenshots or photos. Analyze images of products or error messages to give customers step-by-step instructions, or find a ticket resolution by locating completed tickets with similar screenshots.

- Voice Troubleshooting: Talk through your issue instead of typing it and receive audio advice in real-time.

- Product Information, SOPs, Documentation: Generate detailed product descriptions or manuals by combining text with images.

- Incorporate images into text-based AI workflows: Descriptive text generated from screenshots or images in Teams, Slack, IT Glue, etc. can feed ticket similarity search and suggested ticket resolutions, making those features more effective.
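To make the ticket-similarity idea more concrete, here is a deliberately simple sketch that ranks resolved tickets by how closely their text matches a new ticket's description (for example, a description a model generated from a screenshot). It is not Neo Agent's implementation, which is not described here. It swaps in plain bag-of-words cosine similarity so it runs on the Python standard library alone; a production system would more likely compare embedding vectors. The function names, ticket IDs, and ticket texts are all hypothetical.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> Counter:
    """Lowercase the text and count alphanumeric tokens (bag of words)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine_similarity(a: Counter, b: Counter) -> float:
    """Cosine of the angle between two token-count vectors (0.0 to 1.0)."""
    dot = sum(a[token] * b[token] for token in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    # Guard against empty texts, which would otherwise divide by zero.
    return dot / norm if norm else 0.0


def most_similar(new_ticket: str, resolved: dict[str, str],
                 top_k: int = 3) -> list[tuple[str, float]]:
    """Rank resolved tickets by similarity to a new ticket's description."""
    query = tokenize(new_ticket)
    scored = [(ticket_id, cosine_similarity(query, tokenize(text)))
              for ticket_id, text in resolved.items()]
    # Highest similarity first; the top matches point to candidate resolutions.
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:top_k]


if __name__ == "__main__":
    # Hypothetical resolved tickets; their text could come from screenshot descriptions.
    resolved = {
        "T-101": "Outlook error 0x800CCC0E cannot connect to server",
        "T-102": "Printer offline on floor 2",
        "T-103": "VPN client timeout error",
    }
    new_ticket = "Screenshot shows Outlook error 0x800CCC0E when sending mail"
    for ticket_id, score in most_similar(new_ticket, resolved):
        print(f"{ticket_id}: {score:.2f}")
```

Keeping the scoring in its own function means the bag-of-words comparison could later be replaced by an embedding comparison without changing the ranking logic.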

Below is a mockup of what multimodal capabilities could look like for an IT support use case:

Implement AI The Right Way

Watch our 2024 AI Update Webinar to get a candid update on our project to implement AI across our business. We’ve reached our first project milestone and things have not gone as we anticipated. We want to share our AI journey, warts and all, because we believe that everyone who is interested in AI will benefit from the lessons that we have learned the hard way.