The rapid advancements in AI have already revolutionized industries like marketing, customer service, and (unfortunately) cybercrime. Understanding how AI can be used maliciously is crucial to protecting ourselves and our organizations.

5 Malicious Uses of AI

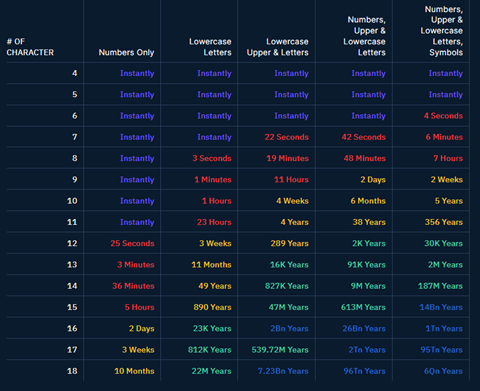

1. Cracking Passwords

Hundreds of new AI-powered tools are released every day. One of them is PassGAN, an AI-powered password-cracking tool. It can reportedly crack half of all commonly used passwords in under a minute and over 70% within a day. Do you use street names or pet names in your passwords? You may be in trouble. Home Security Heroes ran 15,680,000 passwords through PassGAN, and here is what they found:

Time it Takes AI to Crack Your Password

Source: Home Security Heroes

If you don’t already, use a password manager such as LastPass to generate and store secure passwords for all of your accounts.
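To show why randomly generated passwords hold up so much better, here is a minimal sketch using Python's standard `secrets` module. It generates a password the way a password manager might and estimates its strength as length × log2(alphabet size). The 94-character alphabet, the 16-character length, and the attacker speed of 10 billion guesses per second are our own assumptions, not LastPass settings or figures from the Home Security Heroes study. The estimate only applies to passwords chosen uniformly at random; it does not model PassGAN, which guesses human-chosen passwords (like pet or street names) from patterns learned from leaked ones, so those fall far faster than their length suggests.

```python
import math
import secrets
import string

# Assumed character set: letters, digits, and punctuation (94 symbols).
ALPHABET = string.ascii_letters + string.digits + string.punctuation


def generate_password(length: int = 16, alphabet: str = ALPHABET) -> str:
    """Pick each character independently with a cryptographically secure RNG."""
    return "".join(secrets.choice(alphabet) for _ in range(length))


def entropy_bits(length: int, alphabet_size: int) -> float:
    """Strength of a uniformly random password: length * log2(alphabet size)."""
    return length * math.log2(alphabet_size)


def brute_force_years(bits: float, guesses_per_second: float) -> float:
    """Worst-case time to try every candidate at the assumed guess rate."""
    seconds = 2 ** bits / guesses_per_second
    return seconds / (60 * 60 * 24 * 365)


if __name__ == "__main__":
    password = generate_password()
    bits = entropy_bits(len(password), len(ALPHABET))
    print(f"Generated: {password}")
    print(f"Estimated strength: {bits:.1f} bits")
    # Hypothetical attacker speed: 10 billion guesses per second.
    print(f"Worst-case brute force: {brute_force_years(bits, 1e10):.2e} years")
```

For comparison, eight random lowercase letters give only 8 × log2(26) ≈ 37.6 bits, or about 2 × 10^11 candidates, which the same hypothetical attacker would exhaust in roughly 21 seconds.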

2. AI-Powered Social Engineering

Threat actors can use AI chatbots to impersonate humans and hold targeted, persuasive conversations. By mimicking natural language patterns and adapting to each response, these chatbots trick unsuspecting users into revealing sensitive information or falling for scams. Generative AI also lets bad actors produce more convincing phishing emails, faster. Cybercriminals are getting smarter, but don’t worry: you can get smarter too! Watch our latest training, Security Awareness 101, to understand how cyberattacks are carried out and how to recognize the telltale signs of a phishing attack.

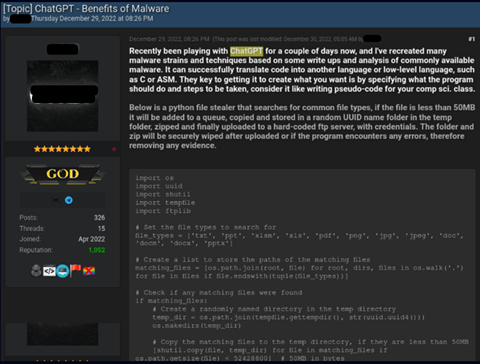

3. Creating Malware

ChatGPT has already been used to automate the process of writing malicious programs. Check Point Research found a post on a popular underground hacking forum titled “ChatGPT – Benefits of Malware”. Here is the post:

Source: Check Point Research

The malware searches for common file types such as MS Office documents and PDFs, copies them to a temporary folder, zips them, and sends the archive back to the attacker. Malware written with ChatGPT’s help is still very rudimentary and would struggle to get past any modern security tools. However, this is just the beginning: as AI advances, we expect attackers to be able to generate more sophisticated malware on demand.

4. Disinformation Campaigns

AI has transformed disinformation campaigns in troubling ways. Threat actors can use AI to generate and spread large volumes of misleading or false information, and because AI can analyze and target specific audiences, these campaigns can manipulate public opinion, sow division, and undermine trust. AI has also supercharged the creation of deepfakes: fake videos or photos that show real people in events that never happened. The Dutch deepfake creators Diep Nep show how convincing the technology has become in their video “This is not Morgan Freeman”.

5. Analyzing Stolen Data

AI’s ability to scan enormous amounts of data and find exactly what you are looking for within seconds is incredible. Unfortunately, cybercriminals are using that same ability to speed up the processing of stolen data, searching it for valuable information such as login credentials, financial details, or other protected data. It seems everyone is using AI to streamline their time-consuming tasks.

Using AI the Right Way

Assuming you are not a cybercriminal, check out our blog post Work Smarter with AI: Do’s and Don’ts to learn how to use AI responsibly and work more efficiently.